Programming has evolved from hand-toggling machine instructions to orchestrating complex systems with AI-assisted tools, reshaping how software is conceived, built, and maintained. This journey spans early theoretical algorithms, high-level abstractions, the web revolution, and today’s AI-accelerated development workflows.

The earliest sparks

Long before electronic computers, Ada Lovelace published an algorithm in 1843 for computing Bernoulli numbers on Charles Babbage’s Analytical Engine. It is often cited as the first published computer program. Her notes also suggested that such a machine could manipulate symbols beyond mere arithmetic. That conceptual foundation foreshadowed later leaps.

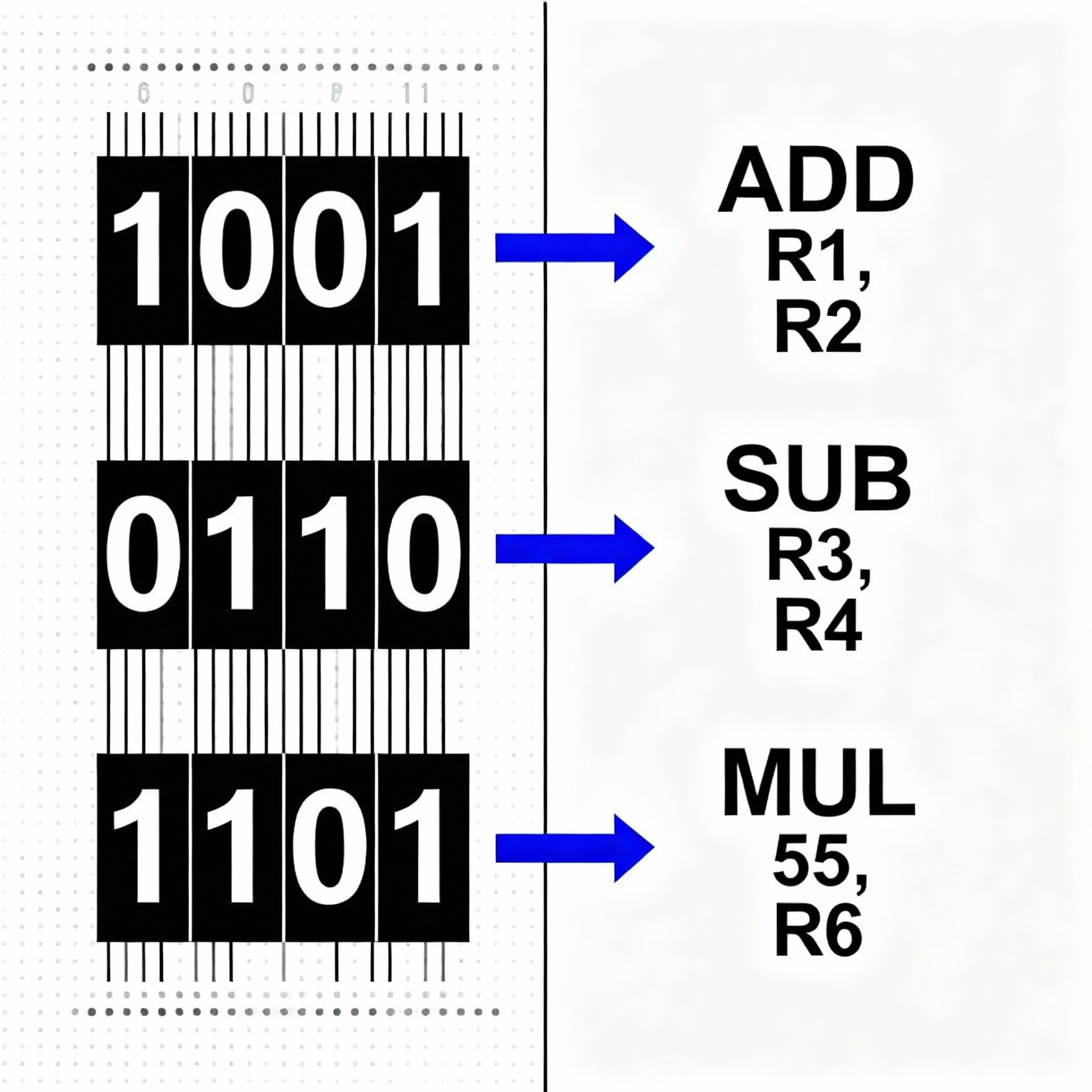

From machine code to mnemonics

Early programming meant manipulating machine code directly, often through switches and punched cards. The process was error-prone and arduous for complex tasks. Assembly languages appeared in the late 1940s and replaced numeric opcodes with mnemonics. This improved readability while preserving tight control over hardware.

High-level languages emerge

The late 1950s brought compiled high-level languages. The most notable were FORTRAN (1957) for scientific computing and COBOL for business; COBOL was specified in 1959, and its first compilers followed in 1960. These languages improved portability and productivity at scale and began decoupling programmers from hardware details, which enabled broader adoption across industries.

Structured programming breakthrough

Through the 1960s and 1970s, structured programming and languages such as ALGOL and C emphasized clear control flow, modularity, and portable systems programming. C (1972) became a bedrock language. It served as the implementation language of operating systems such as UNIX and influenced C++, Java, and many modern toolchains.

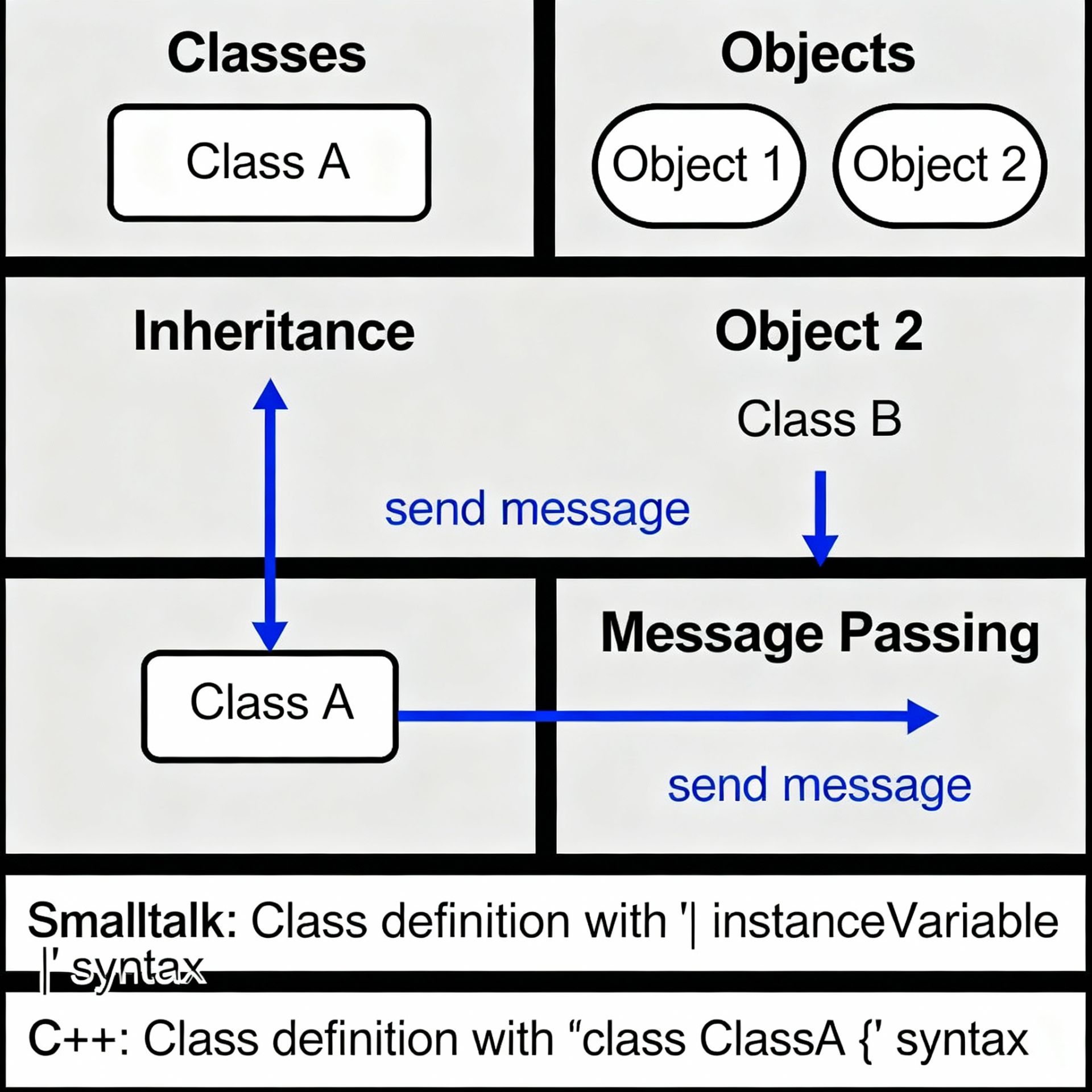

Object-oriented thinking

The 1980s popularized object-oriented programming through Smalltalk and C++, building on ideas Simula introduced in the 1960s. Modeling systems as interacting objects helped manage complexity and promote reuse. The paradigm shaped mainstream engineering practices, architectural patterns, and large-scale application design for decades.

The web and scripting wave

The 1990s transformed software distribution and interaction: Java promised “write once, run anywhere,” while JavaScript enabled dynamic web pages and client-side interactivity. Python’s readability and batteries-included philosophy broadened programming to education, data, and automation, setting the stage for today’s data-driven era.

Managed runtimes and safety

The 2000s brought C# and the .NET managed runtime into the mainstream. The 2010s added Swift (2014) for safe, performant app development on Apple platforms. Rust (1.0 released in 2015) showed that memory safety could be enforced at compile time without garbage collection. That result reshaped systems programming trade-offs and inspired a wave of secure-by-construction tooling and practices.

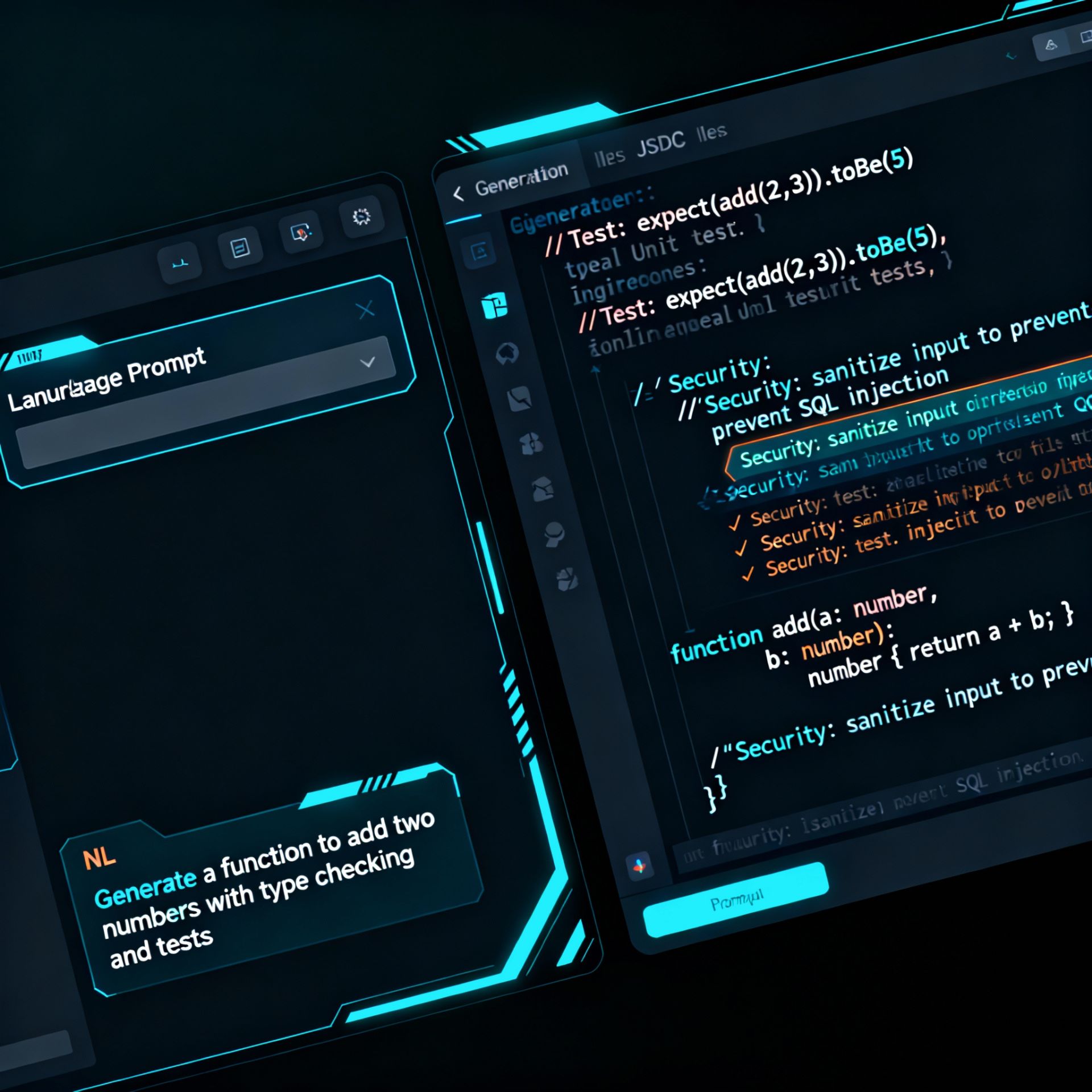

The AI-assisted era

In the early 2020s, AI coding assistants such as GitHub Copilot began reshaping workflows by offering code suggestions, refactors, and inline explanations. By mid-decade, developer surveys reported that a majority of respondents used or planned to use them. These tools speed up writing boilerplate and can assist with code review and test writing. They also hint that natural-language programming interfaces will become part of daily development.

Why it matters now

Most milestones traded direct hardware control for higher-level abstractions, multiplying developer impact and reducing cognitive load across domains. The current shift toward AI-assisted development continues this arc. It augments engineers rather than replacing them and reallocates effort toward architecture, quality, and product thinking.

A concise timeline

1840s: Ada Lovelace’s algorithm for the Analytical Engine establishes programmable computation as an idea.

Late 1940s: Assembly languages bring mnemonic instructions, easing work compared with raw opcodes.

1957–1960: FORTRAN and COBOL broaden scientific and business computing with high-level syntax.

1972: C makes portable systems programming practical and influences generations of languages.

1980s: Smalltalk and C++ popularize object orientation for large-scale software.

1990s: Java, JavaScript, Python power cross-platform apps, the browser, and general-purpose productivity.

2010s: Swift and Rust emphasize safety, performance, and modern tooling ergonomics.

2020s: AI-assisted coding integrates into IDEs and CI, accelerating development lifecycles.

What’s next

Expect deeper natural-language interfaces, more robust automated debugging, and real-time optimization as assistants mature. AI tools will likely expand their language coverage and context awareness. Collaborative platforms are then likely to fine-tune models on domain-specific codebases and to strengthen security and compliance throughout the software development lifecycle (SDLC).

Practical takeaways

Embrace polyglot stacks: choose languages for domain fit—systems with Rust, cross-platform backends with Java/Kotlin, data with Python.

Integrate AI tools thoughtfully: treat suggestions as starting points, and enforce code review, tests, and security scanning to maintain standards.

Prioritize safety and maintainability: adopt modern language features, linters, and memory-safe paradigms to reduce latent defects.

Programming’s evolution is a steady climb up the abstraction ladder, and AI is the latest rung—augmenting human judgment while compressing the distance from idea to reliable software.